Output Organizer: Installation

levigo provides a Helm chart to install and operate Output Organizer within a Kubernetes cluster. The chart is verified to work on Azure, Rancher, Tanzu, OpenShift and other Kubernetes platforms.

A reasonable understanding of Kubernetes and Helm concepts is required to follow the installation instructions.

Architecture

Deployment

The diagram below provides a high-level overview of an Output Organizer deployment. The Helm chart provisions all required pods:

The worker pods can be extended with custom functionality for your workflow. By default, the chart includes an export worker.

Viewer

- viewer: The jadice web toolkit document viewer allows users to view, modify and annotate documents.

Organizer

- organizer: The organizer pod provides the Output Organizer's core functionality - the ability to upload, assemble and modify documents prior to generating an output document.

- document storage: Since input and output documents have a very limited lifetime, they can be stored in an ephemeral system volume.

- runtime data: Document metadata and annotations are stored in a configured database. By default, the database is an embedded H2.

Controller

- controller: The flow controller is part of the flow data processing and is responsible for queuing and distributing long-running tasks.

Workers

- worker-topdf: The to-PDF worker is an exporter that generates the desired PDF output document(s).

- Other optional workers can be deployed on demand by modifying the job templates. For more information, see additional configuration.

Only the viewer and organizer pods need a configured Ingress to be reachable via an external URL. All communication with external systems should use the Fusion REST API.

Data flow

The next diagram shows how the public facing components are accessed and how the components interact.

Preparing the installation

Prerequisites

- Kubernetes 1.14+

- Helm 3.1.0+

- Ingress Controller with sticky session support, e.g. NGINX

- Optional: sealed secrets

Registry access

Contact your levigo representative to get access to the repositories:

- Helm Charts: https://artifacts.jadice.com/repository/helm-charts/

- Container Images: http://registry.jadice.com

The Output Organizer Helm chart will then be available at:

https://artifacts.jadice.com/repository/helm-charts/fusion-output-organizer-x.y.z.tgz

Important: You will need the same credentials for both the Helm chart download and the container images. Manual download of the chart is not necessary for installation; it is only required for debugging purposes.

Note: The Helm chart uses a registry secret to pull the container images. This secret is created automatically and receives its credentials from the values.yaml configuration file. In the Helm configuration, set the credentials either with the property secrets.imageRegistry.dockerconfigjson or with the fields secrets.imageRegistry.username and secrets.imageRegistry.password.
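The following values.yaml excerpt is a minimal sketch of the username/password variant. It assumes that the dotted property names map to nested YAML keys in the usual Helm way. The placeholders stand for the credentials provided by levigo. The expected format of the alternative secrets.imageRegistry.dockerconfigjson field is not described here, so it is not shown.

```yaml
# Hypothetical excerpt of my-values.yaml.
# Nesting is assumed from the property names secrets.imageRegistry.username/password.
secrets:
  imageRegistry:
    username: "<username>"   # registry user provided by levigo
    password: "<password>"   # registry password provided by levigo
```

If the values file is kept in a repository, avoid committing these credentials in plain text. Sealed Secrets, described below, is one way to handle this.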

Create Kubernetes cluster

Create a Kubernetes cluster. For Azure, you can follow Microsoft's instructions on how to deploy an Azure Kubernetes Service (AKS) cluster.

Configure Kubernetes cluster

Perform preliminary steps to prepare the cluster for the new deployment, at least:

- Check/increase CPU and memory quotas

- Create a namespace

- Check/increase max nodes in autoscaler (optional)
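The namespace and an optional quota can also be created declaratively. The manifest below is a minimal sketch: the namespace name output-organizer and all quota values are hypothetical placeholders, to be sized for your workload. Once a ResourceQuota constrains CPU or memory, Kubernetes may reject pods in that namespace that do not declare requests or limits for those resources.

```yaml
# Hypothetical namespace and quota; apply with: kubectl apply -f namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: output-organizer
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: output-organizer-quota
  namespace: output-organizer
spec:
  hard:
    # Placeholder values: size them according to your sizing requirements
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    requests.ephemeral-storage: 10Gi
    limits.ephemeral-storage: 20Gi
```

The later helm upgrade --install call uses --create-namespace, which also works when the namespace already exists.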

Hostnames and CNAME records for external hosts

Output Organizer requires two externally reachable hosts:

- myorganizer.mycompany.com - for the Output Organizer backend and frontend

- myviewer.mycompany.com - for the document viewer

It is good practice to define the hostnames upfront since they are part of the Helm configuration.

CNAME records (example):

myorganizer.mycompany.com → <myorg>.westeurope.cloudapp.azure.com

myviewer.mycompany.com → <myorg>.westeurope.cloudapp.azure.com

(Optional) Create a project to hold configuration values and trigger deployment workflow

Typically, we create a project repository that provides the Helm configuration and set up a workflow to install or update the deployment in the cluster. Any repository and deployment mechanism that can run Helm commands will suffice. In our example, we assume a GitHub repository with an attached GitHub Actions workflow. The project structure can be set up as follows:

Project structure for Helm deployment:

- /MyHelmRepo
  - .github
    - workflows
      - deploy-output-org-workflow.yaml
  - output-organizer
    - my-values.yaml
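A deployment workflow for this structure could look roughly like the GitHub Actions sketch below. It runs the Helm commands from "Installing the Helm charts" whenever the configuration changes. Several names are hypothetical: the repository secrets HELM_USERNAME, HELM_PASSWORD and KUBECONFIG_CONTENT, and the namespace output-organizer. The cluster credentials are passed as a kubeconfig through the azure/k8s-set-context action. Any other mechanism that gives the runner access to your cluster works as well.

```yaml
# .github/workflows/deploy-output-org-workflow.yaml
# Sketch only: secret names and the namespace are hypothetical.
name: Deploy Output Organizer

on:
  # Redeploy whenever the Helm configuration changes on main
  push:
    branches: [main]
    paths:
      - "output-organizer/**"
  # Allow manual runs from the Actions tab
  workflow_dispatch:

jobs:
  deploy:
    runs-on: ubuntu-latest
    env:
      NAMESPACE: output-organizer   # hypothetical target namespace
    steps:
      - name: Check out the configuration
        uses: actions/checkout@v4

      - name: Install Helm
        uses: azure/setup-helm@v4

      - name: Set the cluster context from a kubeconfig secret
        uses: azure/k8s-set-context@v4
        with:
          method: kubeconfig
          kubeconfig: ${{ secrets.KUBECONFIG_CONTENT }}

      - name: Add the levigo Helm repository
        env:
          HELM_USERNAME: ${{ secrets.HELM_USERNAME }}
          HELM_PASSWORD: ${{ secrets.HELM_PASSWORD }}
        run: |
          helm repo add levigo "https://artifacts.jadice.com/repository/helm-charts/" \
            --username "$HELM_USERNAME" --password "$HELM_PASSWORD"
          helm repo update

      - name: Install or upgrade Output Organizer
        run: |
          helm upgrade --install \
            --namespace "$NAMESPACE" \
            --create-namespace \
            --values ./output-organizer/my-values.yaml \
            fusion-output-organizer \
            levigo/fusion-output-organizer
```

The credentials are handed to the shell through environment variables rather than interpolated directly into the script. This keeps secret values out of the generated script text.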

Helm configuration

In general, please follow the steps described in the Kubernetes deployment of the Output Organizer Helm chart. In the following paragraphs, you can find additional remarks on specific topics.

Sealing Secrets

If you rely on Sealed Secrets, make sure you encrypt the secrets with the Sealed Secrets Controller in your cluster. For details on how the secrets are constructed, see the Kubernetes deployment in the Output Organizer Helm chart. Provide the namespace (as defined above) and the predefined secret names (as listed in Kubernetes deployment) when generating the sealed values. Afterward, enter the encrypted values in your values.yaml.

Image Pull Secrets

The Helm chart requires access to private container images.

By default, the chart will create the required image pull secret if you provide your registry credentials in values.yaml.

If you want to use an existing secret, add the following to your values.yaml:

```yaml
# Global image pull secrets
imagePullSecrets:
  - name: registry-secret
```

This references an existing secret named registry-secret in the target namespace.

Note: This is the default value. You only need to override this if you already have a registry secret with a different name or want to use a pre-existing secret.
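If you prefer to create registry-secret yourself, you can apply a Secret of type kubernetes.io/dockerconfigjson to the target namespace, as in the sketch below. The command kubectl create secret docker-registry produces an equivalent object. The sketch assumes the images are pulled from registry.jadice.com. Replace the placeholders with the credentials provided by levigo.

```yaml
# Sketch of a manually created image pull secret (placeholders in angle brackets)
apiVersion: v1
kind: Secret
metadata:
  name: registry-secret
  namespace: <your-namespace>
type: kubernetes.io/dockerconfigjson
stringData:
  # Docker config JSON; Kubernetes stores it base64-encoded under data
  .dockerconfigjson: |
    {
      "auths": {
        "registry.jadice.com": {
          "username": "<username>",
          "password": "<password>"
        }
      }
    }
```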

Ingress

The Organizer Ingress should be configured to allow larger file uploads and longer request times. The Viewer Ingress requires sticky sessions. If sticky sessions are based on cookies, make sure that the cookies are set for the base URL of the viewer and not for individual sub-paths. Other deployments do not require an Ingress. The Ingress Controller itself has to be configured in the cluster management.

Here is a sample configuration for the "fusion" StatefulSet using nginx:

```yaml
# Global image pull secrets
imagePullSecrets:
  - name: registry-secret

organizer:
  # Resource configuration
  resources:
    requests:
      cpu:
      memory:
      ephemeral-storage:
    limits:
      cpu:
      memory:
      ephemeral-storage:
  ingress:
    enabled: true
    className: "nginx"
    annotations:
      nginx.ingress.kubernetes.io/proxy-body-size: 100m
      nginx.ingress.kubernetes.io/proxy-connect-timeout: "30"
      nginx.ingress.kubernetes.io/proxy-read-timeout: "1800"
      nginx.ingress.kubernetes.io/proxy-send-timeout: "1800"
      nginx.ingress.kubernetes.io/ssl-redirect: "true"
      nginx.ingress.kubernetes.io/configuration-snippet: |
        server_tokens off;
        location /actuator {
          deny all;
          return 403;
        }
    hosts:
      - host: my-organizer.mydomain.com
        paths:
          - path: /
            pathType: Prefix
    tls:
      - secretName: <my-organizer-ingress-tls-secret-name>
        hosts:
          - my-organizer.mydomain.com

viewer:
  # Resource configuration
  resources:
    requests:
      cpu:
      memory:
    limits:
      cpu:
      memory:
  ingress:
    enabled: true
    className: "nginx"
    annotations:
      nginx.ingress.kubernetes.io/affinity: "cookie"
      nginx.ingress.kubernetes.io/session-cookie-name: "route"
      nginx.ingress.kubernetes.io/session-cookie-max-age: "172800"
    hosts:
      - host: my-organizer-viewer.mydomain.com
        paths:
          - path: /
            pathType: Prefix
    tls:
      - secretName: <my-organizer-ingress-tls-secret-name>
        hosts:
          - my-organizer-viewer.mydomain.com

# Resource configuration for workers
worker-topdf:
  eureka:
    endpoint: http://eureka-server.mydomain.com/eureka
  resources:
    requests:
      cpu:
      memory:
    limits:
      cpu:
      memory:

controller:
  resources:
    requests:
      cpu:
      memory:
    limits:
      cpu:
      memory:
```

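Note: The Helm chart provides reasonable default resource requests and limits. You can override these values in your values.yaml if your workload requires different sizing. If you copy the sample above, either fill in the empty resource keys or remove them. An empty key is parsed as null, and Helm treats a null value as an instruction to remove the chart's default for that key.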
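To scope the sticky-session cookie to the viewer's base URL, as required in the Ingress section, the NGINX Ingress Controller provides the nginx.ingress.kubernetes.io/session-cookie-path annotation. The sketch below shows the viewer annotations from the sample with this annotation added. Merge it into the existing viewer section rather than adding a second viewer key.

```yaml
viewer:
  ingress:
    annotations:
      nginx.ingress.kubernetes.io/affinity: "cookie"
      nginx.ingress.kubernetes.io/session-cookie-name: "route"
      nginx.ingress.kubernetes.io/session-cookie-max-age: "172800"
      # Set the sticky-session cookie for the base URL of the viewer,
      # not for individual sub-paths
      nginx.ingress.kubernetes.io/session-cookie-path: "/"
```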

CPU, Memory and Storage

The resources of the services deployed by the fusion chart can be customized. We strongly recommend adjusting these values when scaling the application or to improve stability and performance. A sample configuration looks like this:

```yaml
resources:
  requests:
    cpu: 500m
    memory: 1024Mi
    ephemeral-storage: "1024Mi"
  limits:
    cpu: 500m
    memory: 1024Mi
    ephemeral-storage: "1024Mi"
```

For sizing recommendations see fusion sizing.

(Optional) Database Configuration

In addition to the pre-configured embedded H2 default database, Output Organizer supports a variety of databases via JPA. This enables you to connect to existing database instances. The database is configured using a JDBC URL, a driver class and a database dialect, similar to the Spring Boot JPA configuration. To help you set the correct values for your database, the following table lists examples for different databases:

| Database | driver-class (organizer.db.driverClassName) | jdbc-url (organizer.db.jdbcURL) | database-platform / dialect (organizer.db.databasePlatform) | Comment |
|---|---|---|---|---|
| H2 | org.h2.Driver | jdbc:h2:mem:fusion | org.hibernate.dialect.H2Dialect | Default, only for use with a single organizer instance |
| MariaDB | org.mariadb.jdbc.Driver | jdbc:mariadb://mydb/fusion?pinGlobalTxToPhysicalConnection=true | org.hibernate.dialect.MariaDBDialect | |
| MySQL | com.mysql.cj.jdbc.Driver | jdbc:mysql://mydb/fusion?allowPublicKeyRetrieval=true | org.hibernate.dialect.MySQL8Dialect | |
| PostgreSQL | org.postgresql.Driver | jdbc:postgresql://mydb/fusion | org.hibernate.dialect.PostgreSQLDialect | |
| MSSQL | com.microsoft.sqlserver.jdbc.SQLServerDriver | jdbc:sqlserver://mydb;databaseName=fusion | org.hibernate.dialect.SQLServerDialect | |
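To illustrate the table, here is a sketch of a values.yaml excerpt that points the organizer at an existing PostgreSQL instance. It assumes that the dotted property names from the table map to nested keys under organizer, and that mydb is the hostname of your database server. The table does not cover database credentials, so check the chart's default values for the corresponding properties.

```yaml
# Hypothetical excerpt of my-values.yaml for PostgreSQL (values from the table above)
organizer:
  db:
    driverClassName: org.postgresql.Driver
    jdbcURL: jdbc:postgresql://mydb/fusion
    databasePlatform: org.hibernate.dialect.PostgreSQLDialect
```

Unlike the in-memory H2 default, an external database like this one is not restricted to a single organizer instance.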

Installing the Helm charts

Installation

Follow the steps described in the Kubernetes deployment of the Output Organizer Helm chart. Basically, you need to perform the following steps:

- Add levigo registry using provided credentials

- Update helm repo

- Install charts from levigo registry with configuration defined in the previously created values.yaml file

```bash
# Add levigo registry using provided credentials
helm repo add levigo "https://artifacts.jadice.com/repository/helm-charts/" --username "<username>" --password "<password>"

# Update helm repo
helm repo update

# Install charts from levigo registry with configuration
helm upgrade --install \
  --namespace <your-namespace> \
  --create-namespace \
  --values ./my-output-organizer/values.yaml \
  fusion-output-organizer \
  levigo/fusion-output-organizer
```

Verifying the installation

You can check the state of your cluster using kubectl or any available cluster management UI. If all pods are running and report as ready, your installation is successful.

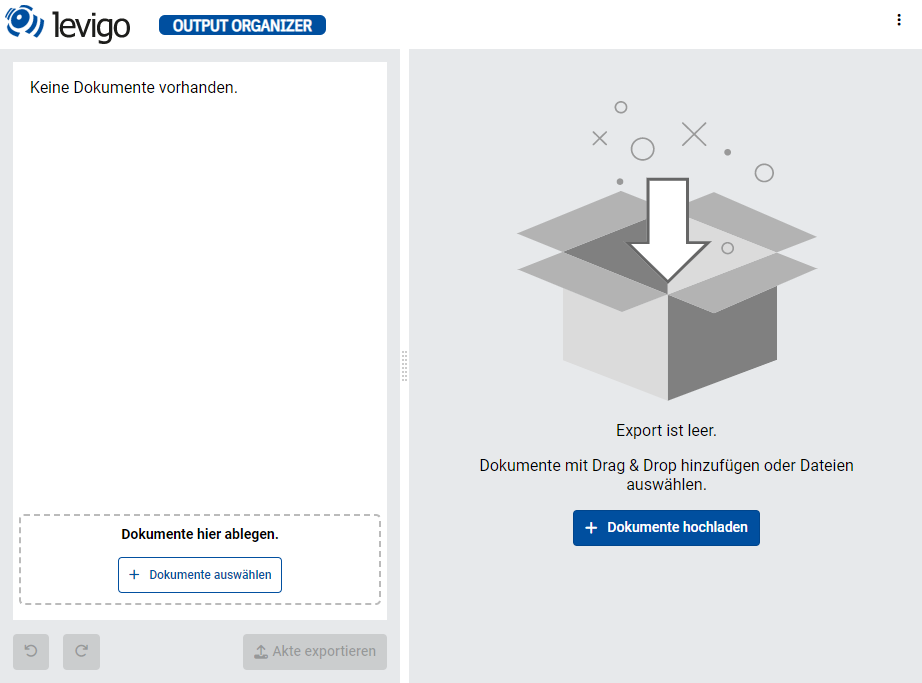

Now open the public-facing Ingress URL of the organizer in a web browser of your choice, for example: https://my-organizer.mydomain.com/

Here you should see the landing page of the Output Organizer web application:

Troubleshooting Common Issues:

If pods show `ImagePullBackOff` status:

```bash
# Check if the registry secret exists
kubectl get secrets -n <your-namespace>

# Check pod events for detailed error messages
kubectl describe pod <pod-name> -n <your-namespace>
```

The most common issue is missing or incorrect image pull secrets. Ensure the registry-secret exists and contains valid levigo registry credentials.

Application startup typically takes 3-5 minutes. Monitor pod status with:

```bash
kubectl get pods -n <your-namespace> -w
```

All pods should show 1/1 Running status before the application is accessible.